Artificial intelligence (AI) is encroaching on human intelligence, one step at a time, causing people to wonder whether the AI machines will eventually become sentient and take control of our world. As our species increasingly embraced the power of rational thinking, we began to develop machines that could simulate rational thought. Beginning at first as an adjunct to human intelligence, the field of artificial intelligence expanded its purview to create computers that could outperform humans in chess, Jeopardy! and a host of other cerebral challenges. Some computer scientists now believe that quantum computers may eventually yield offspring of superior intelligence, leading to a sequence of generations of computers that far surpass their intellectually inferior human creators. Those who ascribe to this belief called “hard AI” argue that computers may ultimately develop emotions and identities, evolving in a reverse sequence to the evolution of human cognition (i.e. rational to emotional) and culminating in a machine like HAL, depicted in the 1968 film 2001: A Space Odyssey.

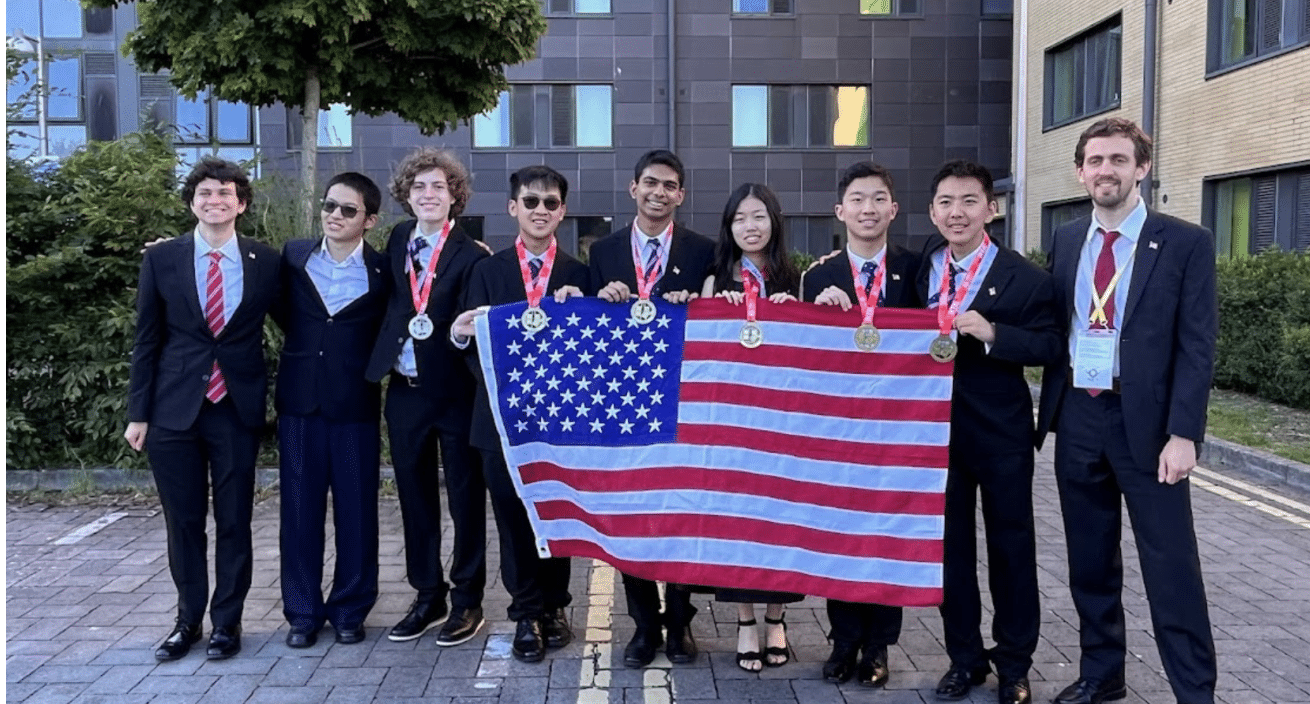

On September 12, just two days ago, Google DeepMind announced that their combined AI systems, AlphaProof and AlphaGeometry 2 solved four out of six problems from the 2024 International Mathematical Olympiad (IMO)– a score that qualifies as a silver medal. (See link) The problem that was identified as the hardest of the six, was solved by AlphaProof and only five of the human contestants. Does this mean that AI is approaching the problem-solving ability of the greatest mathematical problem-solvers on the planet?

AlphaProof uses a mathematical language known as Lean that scans millions of mathematical proofs to learn the logical sequences underpinning their structure. It then proceeds to generate its own solutions to increasingly difficult problems, using techniques based on these mathematical procedures–mimicking the processes that mathematicians apply in structuring proofs. When given a particular problem, it generates several logical sequences that may or may not be valid proofs, and then uses Lean to test the validity of each sequence, declaring a proof when a valid sequence is found. Each proof that is found and verified is used to reinforce AlphaProof’s language model, enhancing its ability to solve subsequent, more challenging problems. Problem 2 on the 2024 IMO Competition is given below.

Problem 2: Find all positive integer pairs (a, b), such that there exist positive integers g, N, such that

gcd(an + b, bn + a) = g holds for all integers n ≥ N.

(In the problem above, “gcd” stands for “greatest common divisor of”)

Google revealed that, while AlphaProof solved two algebra and one number theory problem, and AlphaGeometry 2 solved the geometry problem, it was unable to solve the two problems in combinatorics. Furthermore, it took about 3 days for AlphaProof to solve one of the problems.

Providing another perspective on the achievement of the Google DeepMind models, mathematician Sir Timothy Gowers stated:

The main qualification is that the program needed a lot longer than the human competitors—for some of the problems over 60 hours—and of course much faster processing speed than the poor old human brain. …If the human competitors had been allowed that sort of time per problem they would undoubtedly have scored higher.

Gowers also mentioned that although the software did the core mathematical “reasoning” the problems had to be converted by Lean into a form that the software could process. When asked whether this achievement had implications for mathematical research, Gowers opined, “Are we close to the point where mathematicians are redundant? It’s hard to say. I would guess that we’re still a breakthrough or two short of that.”

When you are typing a response to an email, you might write, “looking forw…” and the auto-response AI completes your sentence with “looking forward to seeing you.” This may create the impression that the AI understands your conversation. However, AI has merely sampled millions of sentences that begin with “looking forw…” and filled in the most common completion. While AlphaProof and AlphaGeometry 2 are much more sophisticated than statement completion software, it doesn’t “understand” the content in the way that humans do. As time unfolds, we may be able to determine the difference between artificial intelligence and human intelligence. In the meantime, we can observe the ongoing competition between organic and inorganic “intelligence,” and in the process come to understand what we mean by the word “intelligence.”