SD stands for “standard deviation.” It is a measure of how far the values in a data set typically lie from the mean. For example, on most standard IQ tests, the mean IQ is 100 and the standard deviation is 15. Performance on IQ tests is closely approximated by a bell-shaped curve, such that about 2/3 of the population (roughly 68%) have an IQ within one standard deviation of the mean, i.e., between 85 and 115.

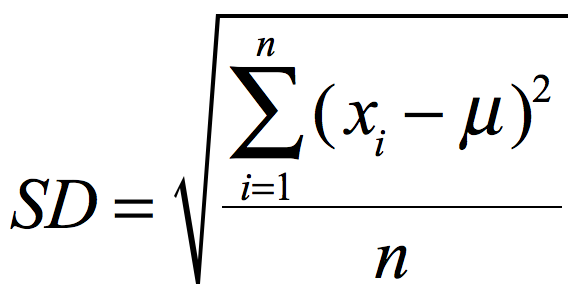
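As a quick check of the "about 2/3" figure, the following sketch computes the share of a bell-shaped (normal) curve that lies within one standard deviation of the mean. It assumes IQ scores follow an exact normal distribution, which is only an approximation; the helper name `fraction_within` is made up for this illustration.

```python
import math

def fraction_within(k):
    """Fraction of a normal distribution lying within k standard deviations of its mean."""
    return math.erf(k / math.sqrt(2))

# Values from the IQ example in the text.
mean_iq, sd_iq = 100, 15
low, high = mean_iq - sd_iq, mean_iq + sd_iq

print(low, high)                      # 85 115
print(round(fraction_within(1), 4))   # 0.6827, i.e. about 2/3
```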

The standard deviation of a set of data {x1, x2, …, xn} is obtained in four steps: subtract the mean (denoted by the Greek letter mu) from each element in the set, square each of these differences, sum the squares, and divide the sum by n, the number of elements in the data set. Taking the square root of this quotient gives the standard deviation. The procedure sounds complicated when described in words; the mathematical notation, as shown below, is more compact, and computers have programs that do this automatically.
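The steps described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `population_sd` and the sample data are invented for the example, and the result is compared against `statistics.pstdev` from the Python standard library, which computes the same divide-by-n standard deviation.

```python
import math
import statistics

def population_sd(data):
    """Standard deviation as described in the text (dividing by n)."""
    n = len(data)
    mu = sum(data) / n                            # the mean
    squared_diffs = [(x - mu) ** 2 for x in data] # subtract the mean, then square
    return math.sqrt(sum(squared_diffs) / n)      # sum, divide by n, take the square root

# Made-up sample data: the mean is 5 and the squared differences sum to 32.
sample = [2, 4, 4, 4, 5, 5, 7, 9]

print(population_sd(sample))      # 2.0
print(statistics.pstdev(sample))  # the standard library's version of the same calculation
```

Note that many software packages divide by n - 1 rather than n by default when the data are a sample from a larger population (in Python, that variant is `statistics.stdev`), so results can differ slightly from the formula described here.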